Testers Tell A Compelling Story

Abstract: If you’ve spent any time in the context-driven testing community, you have probably heard the following directive: as testers, we must tell a compelling story to our stakeholders. But what does this really mean? Are we just talking about a checklist? Are we just trying to sound elite? Is this some way of covering ourselves to prove we’re doing our job? Actually, none of the above. The purpose is to continually inform our stakeholders and increase their awareness of potential product risks so that, ultimately, they can make better business decisions. We do this by using various methods to tell them what was and what was not tested. First we must level-set on the language and agree on the meaning of the words “compelling” and “story”; then we’ll dive into the logistics of delivering that message.

NOTE: I am also going to use the term “Product Management” quite often in this post. By that, I mean the people who do the final risk assessment and make the ultimate business decisions about the product (Michael Bolton has written more about this). This may include the Product Owner on your team, or it may involve a set of external stakeholders.

Being Compelling:

The word “compelling” can seem a bit ambiguous, and its meaning is rather subjective: what is compelling to one person is not so to another. What convinces one person to buy a specific brand may not convince the person standing right next to them. However, regardless of context, we need to set some guardrails around this word. The reason is to stay in line with the community’s effort to establish a common language, so that we can properly judge what qualifies as ‘good work’ in testing and, in this case specifically, how good one is at telling a compelling story as a tester. Yes, you as a tester should be constructively judging other testers’ work if you care about the testing community as a whole. We cannot do that unless we’re armed with the right information. So first, let’s take a very literal view, and then move forward from there:

“Compelling” as defined by Merriam-Webster:

(1) very interesting : able to capture and hold your attention.

(2) capable of causing someone to believe or agree.

(3) strong and forceful : causing you to feel that you must do something.

The information you present should carry the hallmarks of all three definitions, regardless of the target stakeholder’s role within the company. Let’s elaborate on each in the context of a software development environment.

- (1) Interesting: As a tester, by being a salesperson for the product and a primary cheerleader for the work the team has done, I satisfy the first criterion. Because I know the ins and outs of the product, being a subject matter expert lets me speak to its captivating attributes and draw my stakeholders into the discussion. (This also involves knowing your stakeholder, which could fill an entirely separate article on tailoring your story for specific stakeholder roles within the company; more on that later.)

- (2) Cultivate Agreement: As the tester for a given feature, you are aware of an area’s strengths and weaknesses. It is your job to take multiple stances and defend them, be they Pros (typically in the form of new enhancements or bug fixes) or Cons (typically in the form of product risks). Just as an attorney defends a position in closing arguments at trial, so too should you defend your positions on the areas of the product that have changed or are at risk. Because you report on both what you did and did not test, you can contribute far more to joint risk assessment with Product Management. This is where testers influence the product development process the most: not in their actual testing, but in their conversations with those who make the business decisions, when telling the story of their testing. Give your opinions a solid foundation on which to stand.

- (3) Take Action: All information you give to stakeholders should support any action items they may need to take based on that data, so be a competent professional skeptic in your testing, ensuring the data leads Product Management toward fruitful actions. Your feedback as a tester acts as a kind of well-intentioned coercion, or, since that term is typically negative, let’s call it positive peer pressure. Ideally your Product Owner is embedded in, or at least in constant communication with, the scrum team, so any actions that arise from this information will come as little or no surprise to the team.

On that note, surprises generally occur only when the above types of communication are absent, a problem that is of course not limited to testers. Either user requirements are ambiguous or not prioritized (by Product Management), or perhaps there are development roadblocks and test depth is not made tangible (by the Scrum Team). I use these three elements as a heuristic to prime the thinking of our stakeholders, so that they can make smarter, wiser product management decisions for the business.

Becoming A Storyteller:

It might seem obvious, but the best stories always include three main parts: a beginning, a middle and an end; more specifically, characters, conflict and resolution. In a well-structured novel, the author typically spends some time introducing a character so the reader can get to know them. Soon a conflict arises, followed by some form of conclusion, perhaps including the resolution of interpersonal struggles. In testing, we want to develop the characters (feature area prioritization), overcome a conflict of sorts (verify closure of dev tasks and proper bug fixes based on those priorities) and come to a conclusion (present compelling information to Product Management about your testing). Just as an author describes a character’s strengths as well as their shortcomings, we too should make sure our testing story includes both what we did test and what we did not test. Many testers forget to mention what they did not test, and because it goes unsaid, the risk gap grows. This would be akin to an author leaving pages of the book unwritten, and thus open to interpretation. However, unlike a novel, where a cliffhanger ending might be intentionally crafted to spur sales of a sequel, omitting information from our stakeholders should never be intentional, and it is not part of the art and science of testing. When gaps are left, the human brain naturally fills them with its own knowledge, which may be faulty, or worse, with assumptions that can harden into accepted fact if left unchecked long enough. The problem with assumptions is that they grow in a hidden part of the mind, knowable only to that individual, and typically do not reveal themselves until it is too late. Leave as few gaps as possible by becoming a good storyteller.

It can be dangerous when a tester becomes “used to” not telling a story, believing that their job is defined simply by their test case writing and bug reporting skills as they currently exist. As testers, let us not be so limited or obtuse in our thinking when it comes to exploring ideas that help us become better testers; otherwise our craft risks remaining forever in its infancy.

The Content Of The Testing Story:

Now, no matter how good a salesperson you might be, or how convincing and compelling you may sound to your various stakeholders, your pot still needs to hold water. That is to say, the content of your story must be based on solid ground. There are three parts to the content of the testing story that we must tell as testers: product status, testing methods and testing quality.

- Product Status: Testers should tell a story about the status of the product, not only what it does, but also how it fails or could fail. This is where we report on bugs found and other possible risks to the customer. Don’t forget that this report should also cover how well the product performs, and the extent to which it meets or exceeds our understanding of customer desires.

- Testing Methods: Testers also tell a story about how we tested the product. What test strategies and heuristics are you using, and why are those methods valuable? How does your model for testing expose valuable risks? Have you also described what you did not test and why (intentional vs. blocked)? Tip: Artifacts that visualize how you prioritize risk testing can greatly reduce your storytelling effort.

- Testing Quality: Testers also talk about the quality of the testing. How well were you able to conduct the testing? What were the risks and costs of your testing process? What made your work easier or harder, and what roadblocks should be addressed to aid future testing? What is the product’s testability (your freedom to observe and control)? What issues did you raise as testers, and how were they addressed by the team?

All three of these elements help us to make sure the content of our testing story is not only sound but also durable in order to hold up under scrutiny.

The Logistics of Telling the Story:

So what artifact do we, as testers, have to show for our testing at the end of the sprint? No, it is not bug counts, dev tasks or even the tests we write. Developers have the actual code as their artifact, which is compelling to the technical team, since it can be code reviewed and checked against SLAs, business rules, etc. Traditionally, our artifact as testers has been test cases, but as a profession, I feel we’ve missed the mark if we think a test case document is a good way to tell a compelling story. Properly documented tests may be necessary in most contexts, but Product Management honestly does not have the time to read through every test case, nor should it need to. Test cases are for you, the testing team, to use for cross-team awareness, regression suite building, future refactors, dev visibility, etc., while it is the high-level testing strategy that provides the most value to stakeholders in Product Management.

As for the actual ‘how-to’ logistics, there are multiple options that testers should explore within their context. Since humans are visually driven, a picture is worth a thousand words, and using models provides an immense and immediate payoff for very little labor. Now that we’ve established criteria for what makes a story compelling and what its content should be, let’s look at the myriad tools at your disposal that can help with the logistics of putting that story together.

Test Models:

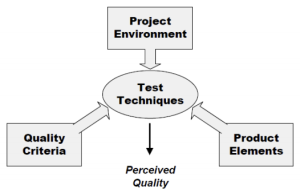

Models that inform our thinking as testers will inherently help Product Management make better business decisions. This influence is an unavoidable positive outcome of using models. The Heuristic Test Strategy Model (HTSM) by James Bach, available as an XMind mind map, can greatly broaden our horizons as testers. If you are new to this model, you can focus initially on just the Quality Criteria and Test Techniques nodes. Together they give you a ready-made template that will not only help you become an expert at telling that compelling story to your stakeholders, but will eventually become part of your definition of what it means to be a tester, rather than feeling like extra work.

By using models during grooming, planning and sprint execution, a tester can expand on each node for the specific feature and prioritize testing of each one as High, Medium or Low, as a way to inform Product Management of a tiered test strategy. This kind of test model can also be made visible to the entire team before testing even begins, allowing testers to be more efficient and communicative and better closing the risk gap between them and the rest of the team, namely developers. More often than not, developers have largely settled in their heads how they are going to code something by the end of team planning, so making the test strategy available to the entire team lets them compare both sets of intentions, their own and the tester’s, which squashes assumptions and exposes even more product risks.
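As a rough illustration of such a tiered strategy, here is a minimal Python sketch. The node names are taken from HTSM’s Quality Criteria, but the `Node` and `Priority` types, the `summarize` helper and the sample plan are hypothetical, invented for this example rather than part of HTSM itself. It orders nodes from High to Low and records whether each was tested or, if not, why (intentional vs. blocked), so the summary covers both what was and was not tested.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    """Hypothetical three-tier priority scale (High, Medium, Low)."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Node:
    """One model node (e.g. an HTSM Quality Criterion) for a single feature."""
    name: str
    priority: Priority
    status: str  # "tested", "not tested (intentional)" or "not tested (blocked)"


def summarize(nodes: list[Node]) -> list[str]:
    """Order nodes from High to Low so stakeholders see the riskiest areas first.

    sorted() is stable, so nodes with equal priority keep their original order.
    """
    ordered = sorted(nodes, key=lambda n: n.priority, reverse=True)
    return [f"[{n.priority.name}] {n.name}: {n.status}" for n in ordered]


# Hypothetical plan for "Feature X"; priorities and statuses are illustrative only.
plan = [
    Node("Usability", Priority.MEDIUM, "tested"),
    Node("Security", Priority.HIGH, "not tested (blocked)"),
    Node("Capability", Priority.HIGH, "tested"),
    Node("Installability", Priority.LOW, "not tested (intentional)"),
]

if __name__ == "__main__":
    for line in summarize(plan):
        print(line)
```

Sorting by priority puts the blocked High-priority Security node at the top of the summary, which is exactly the kind of “not tested” item that should be surfaced rather than left unsaid.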

Testing Mnemonics:

In testing, mnemonics are usually acronyms that spell words or phrases that are easy for humans to remember. For example, FEW HICCUPPS is one: “H” stands for History, “I” for Image, “C” for Comparable Products, etc. SFDIPOT (San Francisco Depot) is another, meant to prime our thinking about how to test. These mnemonics are structured this way to make our test coverage more robust, helping us fill gaps we would otherwise have missed; not because we are inept, but because we are simply human. Other popular testing mnemonics used by the community, which should help with your test strategy and ease storytelling, are collected in the “Testing Mnemonics” list.

At CAST 2015, a testing conference I attended in August in Grand Rapids, Michigan, I listened to Ajay Balamurugadas talk about fulfilling his dream of finally speaking at an international testing conference. His passion for testing was infectious, and one of his suggestions was to pick a single mnemonic each day from that list and try it out. It takes only five minutes to understand each one, and then a time-box of 30 to 60 minutes to apply it to a given story. Any tester who claims they do not have time to try these is doing one of the following, none of which is constructive: deluding themselves about the reality of time, intentionally shirking responsibility, confining themselves to their own cultural expectations, or actively refusing to learn and grow as a tester. Try these out and see what happens. Use the ones that work for your context and discard the others, but be sure to tell your team and other testers within your division what did and did not work for you, since sharing that information saves others from having to reinvent the wheel.

Decision Tables:

At CAST 2015, Robert Sabourin reminded me how testers can use decision tables, something I had not done since my days testing access control panels in the security industry, yet the concept is easily applied when exploring software pathways and user scenarios. In my opinion, this is a more mathematical approach to storytelling, using boolean logic, but it can be just as effective. The end artifact is still a fairly thorough story of how you are going to conduct your testing, but note that decision tables do not account for questions raised during testing or for areas the tester will not test, so these aspects should be documented alongside the decision table. While more mathematical than straightforward testing models like HTSM, and arguably less user-friendly, this visual can still be easily explained to non-technical folks in Product Management. I suggest it here because this method may appeal to minds more geared toward this type of thinking.

So, how does it work? In short, testers construct test flows and scenarios in a format that contains three parts: Actions, Conditions and Rules. These components, along with the expected outcome, True or False, determine the testing pathways. There is none of the subjectivity of non-boolean expected results, since each value is on or off, a 1 or a 0. This paints a very clear picture of how a feature has been tested, and implies that other scenarios are untested. It gives product owners insight into your test strategy as well as awareness of potential risks they may not yet have foreseen, as you explore the product for value from a customer’s perspective; albeit a simulated customer perspective. Remember, we can never be the customer, but we can simulate click paths using established user personas in our flow testing process.
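To make the Actions, Conditions and Rules structure concrete, here is a minimal Python sketch for a hypothetical login feature. The condition names, the business rule in `expected_action`, and the set of rules marked as tested are all invented for illustration; they are not taken from Sabourin’s material. The sketch enumerates every combination of boolean conditions (one rule per combination), attaches the expected action, and lists the rules that were not exercised so they can be reported as untested.

```python
from itertools import product

# Hypothetical conditions for a login feature; each is either True or False.
CONDITIONS = ["valid_username", "valid_password", "account_locked"]


def expected_action(valid_username: bool, valid_password: bool,
                    account_locked: bool) -> str:
    """Hypothetical business rule: map one combination of conditions to an action."""
    if account_locked:
        return "show locked message"
    if valid_username and valid_password:
        return "grant access"
    return "show error"


def build_decision_table() -> list[dict]:
    """Enumerate every rule: one per combination of condition values (2**n rules)."""
    table = []
    for values in product([True, False], repeat=len(CONDITIONS)):
        rule = dict(zip(CONDITIONS, values))
        rule["action"] = expected_action(*values)
        table.append(rule)
    return table


def untested_rules(table: list[dict], tested_indexes: set[int]) -> list[dict]:
    """Return the rules not exercised, so they can be reported as untested."""
    return [rule for i, rule in enumerate(table) if i not in tested_indexes]


if __name__ == "__main__":
    table = build_decision_table()
    tested = {0, 1, 3}  # hypothetical: the rules actually exercised this sprint
    for i, rule in enumerate(table):
        flags = " ".join("T" if rule[c] else "F" for c in CONDITIONS)
        status = "tested" if i in tested else "NOT tested"
        print(f"Rule {i + 1}: {flags} -> {rule['action']} ({status})")
```

Because the table enumerates every combination, the untested rules fall out automatically instead of going unsaid; with three conditions there are eight rules, and exercising three of them leaves five to report.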

Side Note: If you are not doing flow testing based on established User Personas, ask your Product Management team to provide them so that you can do better testing work in that area. Anyone conducting flow testing with their own self-created click-through paths, rather than your established industry Personas, may not be adding as much product value as they believe.

Why Do This Extra Work?

We should be able to qualify the testing we have done on a given feature in a way that is digestible by our stakeholders. Again, this is for the sake of increasing awareness, not simply proving that you ‘did your job’. If your Product Owner asks you, “What is your test strategy for Feature X?” what would your answer be? Will you fall back on the typical response and just tell them you used years of knowledge and experience with the product to do the job? Or will you be able to actually show them something that substantiates your testing from a high-level view, something they can understand and get real value from? The latter, I hope. Believe it or not, your stakeholders need this information. Some may claim they have been ‘getting by’ just fine all this time without this extra level of storytelling, so they do not need it. I liken this argument to a swimmer saying he beat everyone in his heat, so he’s ‘good enough and doesn’t need improvement.’ First in your heat might be impressive, but in the greater competition, outside of that vacuum, those stats might fall flat against the larger pool of competitors. Try to look through a paper towel roll and tell me you can see the full picture without fibbing a little.

On that note, it’s our directive as testers to constantly learn from each other within the community, something most testers have yet to explore. We’ve all heard that to teach is ‘to learn something for a second time.’ By pushing ourselves to use new cognitive tools to tell a story, we also help ourselves become Product SMEs, allowing us to be more thoughtful and valuable testers. This benefits not only the company but your own career path as well. If interested, you can read more on that in my blog post titled The Improvement Continuum.

Tailor Your Story:

Finally, there are multiple ways to tell the same story, and your methods should change depending on your audience. For example, we should not use the same talking points with C-level management as with our Product Owner. Since each role within the company has a different relationship to the product, the story should also be different. You would obviously use the same themes, but your language should be tailored to best fit each role’s specific perspective on the product. In the YouTube video “Talking with C-Level Management About Testing,” Keith Klain discusses how different that messaging should be, depending on your target audience. My favorite moment in that video is when the interviewer asks Keith, ‘How do you talk to them about testing?’ and he replies, ‘I don’t talk about testing,’ then explains how we can discuss testing without using the traditional vernacular. Being aware of your audience should influence not only how you test but how you talk about your testing. I might be compelled to write another blog post on this topic, if there’s enough interest in how to shape the testing story for the various roles among your stakeholders.

Conclusion:

It is common for Product Management and development teams to be on two completely different pages when it comes to managing customer expectations. Developers and testers can lose sight of the business risks, while product owners and VPs can lose sight of the technology constraints. Ultimately, it is the job of Product Management to make the final call on deploying new code, while our job as testers is to inform those managers about any potential product risks related to the release. This was mentioned in the Abstract, but it is worth highlighting again; Michael Bolton explores that tangent further in his DevelopSense blog post “Testers: Get Out of the Quality Assurance Business.” Again, as James Bach puts it, the purpose of testing is “to cast light on the status of the product and its context in the service of our stakeholders.” Testers tell a compelling story, but at the end of the day, your story should roll up to that purpose. If it does not, reevaluate whether the information you’re sharing is for your benefit or your stakeholders’. Be professionally skeptical and ask yourself questions: Is this worth sharing? Have I made it compelling enough to drive home my progress? Many Product Owners have shown little interest in what their testers document because it has traditionally been of little value to them. Don’t use a Product Owner’s lack of interest as an excuse to stunt your own growth as a tester. Get good at this, and become a more responsible tester. While overcoming apathy is the responsibility of the entire team, we are at the helm of testing and have the power to change it for the better.

I’d like to hear your feedback, based on your own experiences of how you tell your testing story to your stakeholders. I’ve made the case for us, as testers, needing to tell that story. I’ve also gone one step further and provided you with models and other techniques you can use to get started putting this into action. I am eager to hear how you currently do this, as well as which parts interest you most, from the material I have presented here.